Did you notice that interactive containers in FileMaker are really web viewers?

Even a simple picture is shown using a web viewer with an IMG tag:

<html style="height: 100%;">

<head>

<meta http-equiv="Content-Type" content="text/html; charset=utf-8">

<style>

body {background-color:rgba(100%,100%,100%,1);}

</style>

</head>

<body style="border:0; margin:0; padding:0; overflow: hidden; height: 100%; ">

<img src="file:////Volumes/Mac/Users/cs/Library/Caches/FileMaker/ContainerCache/Interactive_Container_Webviews/5B5E9D4AA552A54E72180807B75548D6/LocalThumbs/45/7D/15B8790A/D6942AC2/400D5012/8E5DW/968x645.jpg" width="484" height="323" style="position:absolute; top:53px; left x;">

</body>

</html>

We get the HTML text using the WebView.GetHTMLText function. Please define a name for the container control so you can reference it later in the WebView function calls.
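Once you have that HTML text, you may want to pick out the cached image path and the displayed size. Below is a minimal sketch using Python's standard `html.parser`. It assumes the HTML looks roughly like the sample above. The class and function names, and the shortened sample path, are hypothetical and not part of the MBS Plugin.

```python
from html.parser import HTMLParser
from typing import Dict, List


class ImgTagCollector(HTMLParser):
    """Collects the attributes of every <img> tag found in an HTML document."""

    def __init__(self) -> None:
        super().__init__()
        self.images: List[Dict[str, str]] = []

    def handle_starttag(self, tag: str, attrs) -> None:
        # HTMLParser lowercases tag and attribute names; attrs is a list of (name, value) pairs
        if tag == "img":
            self.images.append({name: value or "" for name, value in attrs})


def extract_images(html_text: str) -> List[Dict[str, str]]:
    """Return one attribute dictionary per <img> tag, in document order."""
    parser = ImgTagCollector()
    parser.feed(html_text)
    parser.close()
    return parser.images


if __name__ == "__main__":
    # Shortened, hypothetical version of the HTML returned for a picture container
    sample = (
        '<html><body style="margin:0;">'
        '<img src="file:///tmp/ContainerCache/968x645.jpg" width="484" height="323">'
        "</body></html>"
    )
    for img in extract_images(sample):
        print(img["src"], img["width"], img["height"])
```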

Since I have to do a lot of paperwork for my company, last year I submitted two petitions to the Bundestag to make this work a bit easier:

Petition 68061: Value-added tax - raising the limit for small-amount invoices in the UStDV

Petition 68062: Income tax - adjusting the limits for low-value assets

The first one concerns the requirements for invoices. It has often happened that we had receipts over 150 euros. If you want to deduct input tax, you need an invoice showing the address of the recipient of the service. However, that address is often missing, and then running around to get a proper invoice is a nuisance. It would be nice if the limits were finally adjusted, so that we would have to ask companies for a proper invoice less often.

The second one is about depreciation. The limit for low-value assets (GWG) has been 800 marks, today 400 euros, for 60 years. I would welcome it if the limit were finally raised, so that items up to 800 or 1000 euros could be written off directly without having to deal with depreciation for years. There is the option of depreciating low-value assets as a pool over several years, but that is also extra work for the bookkeeping.

Maybe you have 5 minutes to co-sign them?

There are also other good petitions on epetitionen.bundestag.de.

You may know that our plugins include CLGeocoder functions to query geo coordinates for addresses on Mac. But some users need this cross-platform, so I recently wrote a sample script for a user.

First, get a Google account. It's good to keep this separate, so it may be best to create a new account for each project that needs maps, ideally in the client's name, so Google knows whom to contact about abuse.

Then go to the developer page for the Google Maps API, where you can request a new API key. Copy the API key into the field in my example database, or just hard-code it in the script. For client projects, though, it's good to have a preferences layout for global settings like this, so you don't need to check every script to see whether it uses the key.

The script starts a CURL session, encodes the address to query into the URL and runs the query. The JSON result is stored in a field, and the interesting values are extracted: latitude, longitude and formatted address. Here is the script:

#Start new session

Set Variable [$curl; Value:MBS("CURL.New")]

#Set URL to load (HTTP, HTTPS, FTP, FTPS, SFTP, etc.)

Set Variable [$result; Value:MBS("CURL.SetOptionURL"; $curl; "https://maps.googleapis.com/maps/api/geocode/json?address=" & MBS("Text.EncodeToURL"; Substitute(Google Maps API::Address to query; ¶; ", "); "utf8") & "&key=" & Google Maps API::API Key)]

#RUN now

Set Field [Google Maps API::Result; MBS("CURL.Perform"; $curl)]

#Check result

Set Field [Google Maps API::JSON; MBS("CURL.GetResultAsText"; $curl; "UTF8")]

Set Field [Google Maps API::Debug Messages; MBS("CURL.GetDebugAsText"; $curl)]

Set Variable [$httpResult; Value:MBS("CURL.GetResponseCode"; $curl)]

Set Variable [$status; Value:MBS( "JSON.GetPathItem"; Google Maps API::JSON; "status"; 1 )]

If [$status = "OK"]

Set Field [Google Maps API::Latitude; MBS( "JSON.GetPathItem"; Google Maps API::JSON; "results¶0¶geometry¶location¶lat"; 1 )]

Set Field [Google Maps API::Longitude; MBS( "JSON.GetPathItem"; Google Maps API::JSON; "results¶0¶geometry¶location¶lng"; 1 )]

Set Field [Google Maps API::Found; MBS( "JSON.GetPathItem"; Google Maps API::JSON; "results¶0¶formatted_address"; 1 )]

Else

Set Field [Google Maps API::Latitude; ""]

Set Field [Google Maps API::Longitude; ""]

Set Field [Google Maps API::Found; ""]

End If

#Cleanup

Set Variable [$result; Value:MBS("CURL.Cleanup"; $curl)]
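For readers outside FileMaker, the same two steps can be mirrored in Python: building the request URL and pulling latitude, longitude and formatted address from the JSON. This is a rough sketch only. It uses the standard `urllib.parse.quote` and `json` modules, the function names are hypothetical, and no network request is made here.

```python
import json
from typing import Optional, Tuple
from urllib.parse import quote

GEOCODE_ENDPOINT = "https://maps.googleapis.com/maps/api/geocode/json"


def build_geocode_url(address: str, api_key: str) -> str:
    """Build the request URL like the script does: line breaks become ", ",
    then the address is URL-encoded as UTF-8."""
    # FileMaker's paragraph mark is a carriage return; also handle CRLF and LF
    one_line = address.replace("\r\n", ", ").replace("\r", ", ").replace("\n", ", ")
    return (f"{GEOCODE_ENDPOINT}?address={quote(one_line, safe='')}"
            f"&key={quote(api_key, safe='')}")


def parse_geocode_response(json_text: str) -> Optional[Tuple[float, float, str]]:
    """Return (latitude, longitude, formatted address) of the first result,
    or None if the status is not "OK" (mirrors the If/Else in the script)."""
    data = json.loads(json_text)
    if data.get("status") != "OK" or not data.get("results"):
        return None
    first = data["results"][0]
    location = first["geometry"]["location"]
    return location["lat"], location["lng"], first["formatted_address"]


if __name__ == "__main__":
    print(build_geocode_url("Hauptstr. 1\rBerlin", "YOUR_API_KEY"))
```

To actually send the request, you could pass the URL to `urllib.request.urlopen` and feed the decoded response body to `parse_geocode_response`.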

I hope this helps. The example database will be included in the next plugin release, or email me if you need a copy today.

For all FileMaker users in the Hamburg area:

New Year's reception with sparkling wine & finger food

on January 28, 2017, 11:00 a.m.

Dear friends of FileMaker Magazin,

every turn of the year brings new momentum, and we look ahead with anticipation again. We are looking forward to the new year in the FileMaker world, ideally together with you!

That's why we cordially invite you to a small sparkling wine reception at our publishing offices in Hamburg-Ottensen.

Please let us know in advance whether we may look forward to your attendance.

Here's to a lively, successful and positive new year!

Klemens Kegebein

and the team of K&K Verlag

This year I'm also coming to Hamburg for the reception. See you there!

Nickenich, Germany - (January 23rd, 2017) -- MonkeyBread Software today is pleased to announce MBS FileMaker Plugin 7.0 for Mac OS X, Linux and Windows, the latest update to their product that is easily the most powerful plugin currently available for FileMaker Pro. As the leading database management solution for Windows, Mac, and the web, the FileMaker Pro Integrated Development Environment supports a plugin architecture that can easily extend the feature set of the application. MBS FileMaker Plugin 7.0 has been updated and now includes over 4400 different functions, and the versatile plugin has gained more new functions:

New functions help you read details from X509 certificate files. You can read PKCS12 files and extract public and private keys as well as additional certificates. You can then write keys or certificates as PEM files and use them with our CURL functions.

Our new XML functions help you find nodes and attributes in XML text. You can extract text and subtrees. To process XML efficiently, you can let the plugin read the XML and put values into local variables in your script.

For CURL we added new functions to batch download files via FTP. Of course, you can still query the list of files in a directory via the plugin yourself and then download several files one after another. But now there are options to do all this in one transfer, using wildcards to specify which files to fetch.
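The manual route mentioned above (fetch the directory listing, then choose files) mostly comes down to wildcard matching. Here is a small Python sketch using the standard `fnmatch` module on a name-only listing with one name per line. The listing text and function name are hypothetical, and this is not how the plugin implements its wildcard option.

```python
from fnmatch import fnmatchcase
from typing import List


def select_files(listing_text: str, pattern: str) -> List[str]:
    """Pick the file names from a name-only directory listing (one name per line)
    that match a shell-style wildcard such as "*.csv" or "report_??.pdf"."""
    names = [line.strip() for line in listing_text.splitlines() if line.strip()]
    # fnmatchcase compares case-sensitively, so "*.csv" does not match "OLD.CSV"
    return [name for name in names if fnmatchcase(name, pattern)]


if __name__ == "__main__":
    listing = "orders.csv\r\nreadme.txt\r\ncustomers.csv\r\nOLD.CSV\r\n"
    for name in select_files(listing, "*.csv"):
        print("would download:", name)
```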

Using the new function FM.AllowFileDragDrop, you can allow users to drag and drop files from containers in FileMaker to other applications, e.g. the Finder. And if needed, you can switch this on or off when the layout changes.

For DynaPDF we added new functions to query or set the raw content of a page. You can rotate page templates and add page links or watermark annotations to your PDF pages.

As FileMaker on Mac now runs in 64-bit in most cases, we include an AppleScript to create a 32-bit-only or 64-bit-only plugin. The MBS Plugin in our download supports both, and you can split it to get a smaller plugin file if needed.

The functions to work on Word files have been improved. They can now replace tags with multi-line text. And if your template contains a table, we can now remove rows there, too.

When you run SQL queries in FileMaker using our FM.SQL.Execute functions, you can now get the result as text, or have the plugin encode the results properly for CSV export.
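"Properly encoded for CSV" usually means the following. Fields that contain commas, quotes or line breaks are wrapped in double quotes, and any embedded quotes are doubled. The sketch below shows that quoting with Python's standard `csv` module. It only illustrates the encoding rule and is not the plugin's code. The `\r\n` line ending is an assumption; pick whatever your import target expects.

```python
import csv
import io
from typing import Iterable, Sequence


def rows_to_csv(rows: Iterable[Sequence[object]]) -> str:
    """Encode rows as CSV text: fields containing commas, quotes or line breaks
    are wrapped in double quotes, and embedded quotes are doubled."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, quoting=csv.QUOTE_MINIMAL, lineterminator="\r\n")
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(rows_to_csv([["Name", "Note"], ["Smith, John", 'He said "hi"']]))
```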

We improved the PrintDialog, PortMidi, LDAP, Audit, ImageCapture, SerialPort line reading, SmartCard and SQL functions and more. DynaPDF is updated to 4.0.8.19, LibXL to version 3.7.2 and SQLite to 3.16.2.

See the release notes for a complete list of changes.

January 23, 2017 - Monkeybread Software today releases version 7.0 of the MBS Plugin for FileMaker. With more than 4400 functions, it is one of the largest FileMaker plugins available. Here are some of the new features:

With new functions you can now read details from X509 certificates. You can open PKCS12 files and read the private and public keys or any additional certificates they contain. You can then write the keys to PEM files and use them with the CURL functions.

Our new XML functions help you find nodes and attributes in XML text. You can then extract text and subtrees. To process XML efficiently, you can have the plugin read the XML and automatically define variables for your script.

For CURL we have new functions for automated downloads of multiple files via FTP. Of course, you can still query the list of files yourself via the plugin and download one file after another. But now there are options to do this automatically in a single transfer. You can specify a search pattern to load only certain files, for example all files with a certain file extension.

With the new function FM.AllowFileDragDrop you can allow drag & drop for containers with files. This lets you drag files from FileMaker directly to the desktop or into other programs. Of course, you can turn this on or off per layout.

For DynaPDF we have new functions to query or set the raw data of a PDF page. You can now rotate templates for PDF pages and add page links or watermarks to PDF pages.

Since FileMaker for Mac now mostly runs in 64-bit, the plugin comes with AppleScripts that let you create 32-bit-only or 64-bit-only plugins. The MBS Plugin in the download contains 32-bit and 64-bit support, and by splitting it you get a smaller plugin file.

The functions for Word files have been improved, and you can now replace multi-line text. If your template contains a table, you can now delete extra rows.

If you run SQL queries via the FM.SQL.Execute function, you can now also simply get the result as text, or have the plugin encode the texts appropriately for a CSV export.

We also improved the functions for PrintDialog, PortMidi, LDAP, Audit, ImageCapture, SerialPort (reading line by line), SmartCard, SQL and more. DynaPDF now comes in version 4.0.8.19, LibXL in version 3.7.2 and SQLite in version 3.16.2.

All changes are listed in the release notes.

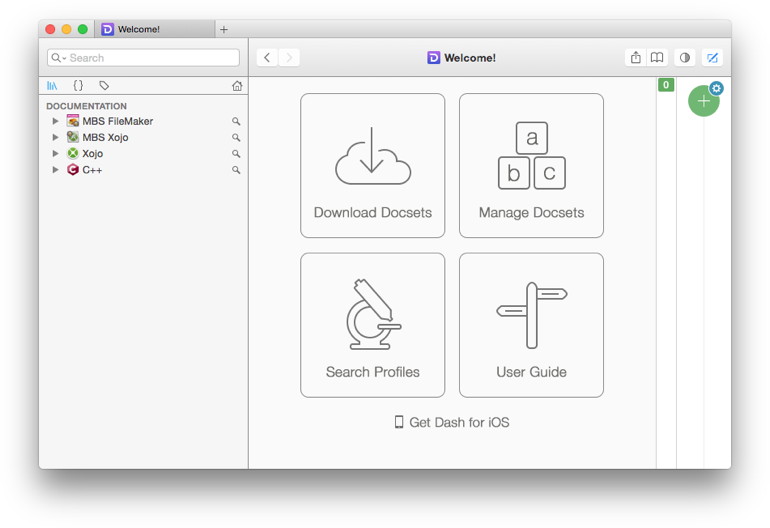

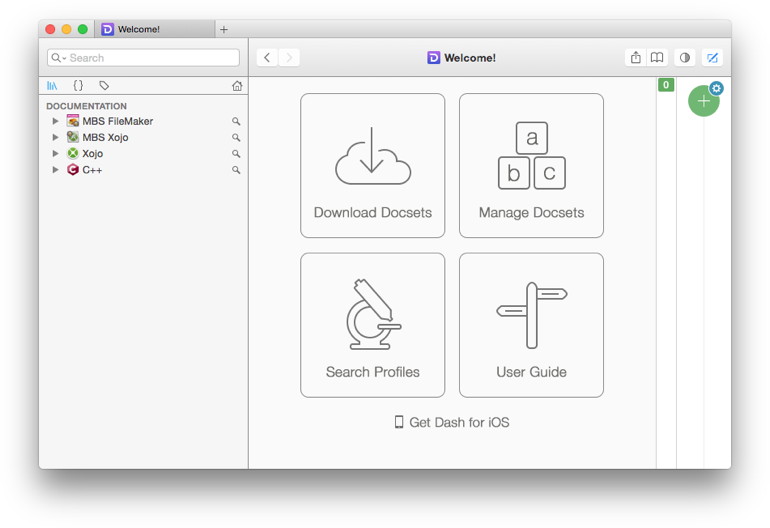

I just updated the archives for the Dash application. Does the automatic update work for you?

Click here to launch Dash and install our plugin help: MBS Xojo Plugins and MBS FileMaker Plugin

You can also find the docset links on our reference websites, where you can download the archives manually if needed: MBS FileMaker Plugin Documentation for Dash and MBS Xojo Plugins Documentation for Dash

Feedback is welcome.

In 2017 I am again offering an MBS FileMaker training course in German.

It takes place on the day before the FileMaker Konferenz in Salzburg, on Wednesday, October 11, 2017, from about 9 a.m. to 5 p.m.

- What's new in the MBS Plugin

- Tour of the examples

- Using the MBS Plugin with FileMaker Cloud

- Using the MBS Plugin with the FileMaker iOS SDK

- Extensions in the Script Workspace

- Popular plugin functions

- FTP/SFTP upload/download

- Integrating web services

- Image processing

- Generating and recognizing barcodes

- Working with the web viewer

- SQL queries in FileMaker or to other database systems

- Time for questions

Register with me. The cost is 99 euros plus VAT, including lunch and coffee breaks.

Please include your VAT ID with your registration and payment.

Or register with Denkform for the MBS workshop on March 2 and December 7, 2017 in Hofheim (Taunus).

New in this prerelease of the 7.0 MBS FileMaker Plugin:

Download at monkeybreadsoftware.de/filemaker/files/Prerelease/ or ask to be added to the Dropbox shared folder.

See you in Phoenix, Arizona, for the FileMaker Developer Conference, July 24-27, 2017.

The ticket price is down to $899, but you also get only two conference days plus one day for the keynote and/or training. Hotel: $149/night.

FBA members get an extra day.

There was a question about how to set a field's background color in FileMaker based on a color stored in another field:

We define one field that gets its color through conditional formatting rules. Another field holds the hex color code to use. To keep the number of rules manageable, we only allow 4096 colors: those where both hex digits of each color channel are equal. So each channel goes from 00 to FF in steps of hex 11 (16 values per channel, 16 × 16 × 16 = 4096 colors). For example, enter FF0000 to make the field background red.
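Here is a quick way to see where the 4096 comes from. The Python sketch below enumerates the allowed codes and snaps an arbitrary RRGGBB code to the nearest allowed one. This is an illustration only, not the Xojo app. The condition text and the field name `Colors::Hex` are hypothetical placeholders for whatever field you type into the app.

```python
from typing import List

# The 16 allowed values per channel: 00, 11, 22, ..., FF (steps of 0x11 = 17)
CHANNEL_STEPS = [d + d for d in "0123456789ABCDEF"]


def allowed_colors() -> List[str]:
    """All 16 x 16 x 16 = 4096 allowed RRGGBB codes, from 000000 to FFFFFF."""
    return [r + g + b for r in CHANNEL_STEPS for g in CHANNEL_STEPS for b in CHANNEL_STEPS]


def snap_to_allowed(hex_color: str) -> str:
    """Round an arbitrary RRGGBB code to the nearest allowed color."""
    hex_color = hex_color.strip().lstrip("#")
    channels = [int(hex_color[i:i + 2], 16) for i in (0, 2, 4)]
    # Nearest multiple of 17 per channel; c / 17 never lands exactly on .5 for integers
    return "".join(f"{round(c / 17) * 17:02X}" for c in channels)


def condition_for(field_name: str, hex_color: str) -> str:
    """Hypothetical condition text for one conditional formatting rule."""
    return f'{field_name} = "{hex_color}"'


if __name__ == "__main__":
    colors = allowed_colors()
    print(len(colors), colors[0], colors[-1])
    print(condition_for("Colors::Hex", snap_to_allowed("#FE0102")))
```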

To generate the conditional formatting rules, we use a Xojo app:

The workflow is like this:

- Cut or copy a field in Layout mode in FileMaker.

- Switch to the app and type the field name to use in the condition.

- Press the button to add the formatting rules.

- Paste the field, now with its color rules, into the layout.

Please try it. To change or build the Xojo app, please download Xojo from the Xojo website. You don't need to buy a license just to run the project with your custom modifications in the IDE.

Download the test database and project here: FieldColor.zip

FileMaker Inc. today released FileMaker Pro 15.0.3, an update that fixes bugs and addresses various compatibility issues.

You can read about the changes in the knowledge base: FileMaker Server 15.0.3 (6 fixes), FileMaker Pro (Advanced) 15.0.3 (6 fixes) and FileMaker Go 15.0.3.

As usual, FileMaker provides updaters only for Windows, and the ones for Mac are missing. So here are the links for Mac:

FileMaker Pro Advanced 15.0.2 to 15.0.3:

https://productupdates.filemaker.com/pro/15.0.2/release/fmpa.15.0.3.305.updates.pkg.zip

FileMaker Pro 15.0.2 to 15.0.3:

https://productupdates.filemaker.com/pro/15.0.2/release/fmp.15.0.3.305.updates.pkg.zip

Only apply them if the matching 15.0.2 version is installed and FileMaker is not running.

On the weekend of March 18-19, 2017, the first ever curl meeting is taking place in Nuremberg, Germany.

Users, developers, binding authors, application authors, curl maintainers, libcurl hackers and other people with a curl interest are welcome!

I will try to be there and finally meet the curl people. I've been using curl in various projects, including the Xojo and FileMaker plugins, for over 10 years.

New in this prerelease of the 7.0 MBS FileMaker Plugin:

- Changed Audit: CurrentTimeStamp, CurrentTime and CurrentDate can now be Timestamp, Time and Date fields instead of Text fields.

- Changed Audit: FieldID, TableID and RecordID can now be number fields instead of text fields.

- Fixed progress dialog updates for macOS Sierra with CURL synchronous upload.

- Fixed an issue with PrintDialog functions and Mac OS X 10.6.

- Enabled script colors again for the German script editor in FileMaker 13 (broken in 6.5).

- Added PrintDialog.GetReset and PrintDialog.SetReset.

- Added progress window option to Files.CopyFile and Files.MoveFile for Windows.

- Changed the SQL functions to detect ODBC connections to FileMaker and handle them better when reading text fields, avoiding a crash.

- Added an enable parameter to FM.AllowFileDragDrop, so you can disable or enable it when needed.

Download at monkeybreadsoftware.de/filemaker/files/Prerelease/ or ask to be added to the Dropbox shared folder.

As you may know, you can use the CURL functions in the MBS Plugin to send emails. We include examples that show how to send with attachments, HTML text and inline graphics. A recent example coming with 7.0pr2 shows how to batch send emails, but it sends the emails one by one, each time over a new connection. I have already improved the example to reuse connections, which helps a lot with speed. Still, the big problem is that network uploads take time: the script waits while the kilobytes of each email travel through the network cables.

Background processing

Luckily, our plugin offers a function to run a cURL transfer in the background: CURL.PerformInBackground. With this function we can start a cURL transfer and the script continues, so we can prepare and send the next email while the previous one is still uploading. Now you need to carefully manage the connections and their status. To show you how this can work, here is an excerpt from our updated example script where we look for a free cURL session:

...

#Find a non busy curl connection

Set Variable [$index; Value:1]

Loop

If [$curls[$index] = ""]

#found free index, setup new CURL session

Set Variable [$curl; Value:MBS("CURL.New")]

Set Variable [$curls[$index]; Value:$curl]

Set Variable [$r; Value:MBS("CURL.SetOptionURL"; $curl; "smtp://" & $SMTPServer)]

Set Variable [$r; Value:MBS("CURL.SetOptionUsername"; $curl; $SMTPUser)]

Set Variable [$r; Value:MBS("CURL.SetOptionPassword"; $curl; $SMTPPass)]

...

Exit Loop If [1]

Else

#found used index

If [MBS( "CURL.IsRunning"; $curls[$index] ) = 0]

#found used index which is done

Perform Script [“HandleFinishedCURL”; Parameter: $curls[$index]]

Set Variable [$curl; Value:$curls[$index]]

Exit Loop If [1]

End If

End If

Set Variable [$index; Value:$index + 1]

If [$index = 9]

#Wait

Set Variable [$index; Value:1]

Pause/Resume Script [Duration (seconds): ,01]

End If

End Loop

...
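The slot-finding loop above is a small connection pool. It has a fixed number of slots, a finished slot is handled and reused, and when all slots are busy there is a short pause before starting over. A rough Python analogue follows, with threads standing in for background cURL transfers. `fake_send` is a hypothetical stand-in for one SMTP upload, and the slot count of 8 matches the `$index = 9` wrap-around in the script. This is a sketch of the pattern, not the plugin's implementation.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, List, Optional, Sequence


def send_all(messages: Sequence[str], send: Callable[[str], str],
             max_slots: int = 8, poll_seconds: float = 0.01) -> List[str]:
    """Send messages with at most max_slots transfers in flight, reusing a slot
    as soon as its previous transfer has finished (like the loop above)."""
    results: List[str] = []
    slots: List[Optional[Future]] = [None] * max_slots
    with ThreadPoolExecutor(max_workers=max_slots) as pool:
        for message in messages:
            index = 0
            while True:
                slot = slots[index]
                if slot is None or slot.done():
                    if slot is not None:
                        # Counterpart of HandleFinishedCURL: collect the finished result
                        results.append(slot.result())
                    slots[index] = pool.submit(send, message)  # start in background
                    break
                index += 1
                if index == max_slots:
                    # All slots busy: start over after a short pause
                    index = 0
                    time.sleep(poll_seconds)
        # Wait for the transfers that are still running
        for slot in slots:
            if slot is not None:
                results.append(slot.result())
    return results


def fake_send(message: str) -> str:
    """Hypothetical stand-in for one SMTP upload; it just sleeps briefly."""
    time.sleep(0.005)
    return "sent " + message


if __name__ == "__main__":
    done = send_all([f"mail {i}" for i in range(20)], fake_send, max_slots=4)
    print(len(done), "emails sent")
```

Results arrive in completion order rather than submission order, which is also true for the background cURL sessions.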

MedinCell S.A. is a company located in Montpellier in southern France near the Mediterranean Sea.

They have been using my plugins for a few years now and are looking for another Xojo developer:

Over the weekend we ran into the issue that you can store files in a container, but you can't simply drag them into other applications or to the Finder.

To enable this behavior, we added a new plugin function called FM.AllowFileDragDrop.

The plugin then intercepts the drag and drop in FileMaker and writes the data into a temporary file, so the drag includes a file reference and works with the Finder and other applications.

Watch the video:

AllowFileDrag

Requires MBS FileMaker Plugin 7.0pr2 or newer.

New in this prerelease of the 7.0 MBS FileMaker Plugin:

Download at monkeybreadsoftware.de/filemaker/files/Prerelease/ or ask to be added to the Dropbox shared folder.

Registration just started for the FileMaker Conference .fmp[x]Berlin 2017.

This conference is organized by Egbert Friedrich and takes place from 1st to 3rd June 2017 in Berlin, Germany.

As the conference is in English, this is your chance to meet people from around the world at a conference in Europe. And for a lot of people it's easier to get to Berlin than to cross the Atlantic and deal with US immigration officers.

Who

dotfmp is an effort of various leading European FileMaker Developers. It is meant to bring all kinds of higher level developers together to share knowledge, educate and challenge each other.

When

dotfmp starts on 31 May 2017 in the late afternoon with a relaxed "Beer and Sausages" in one of the most famous Berlin beer gardens. The session days themselves last from 1 to 3 June, with various social events in the evenings. Additionally, we offer support on 4 June for seeing more of Berlin and its surroundings.

What

dotfmp is a three-day unconference, meetup, hangout or barcamp. It is an informal and self-organized effort to meet in person.

Where

dotfmp takes place at one of the most famous spots in Berlin. The GLS Campus is located in a vibrant area, just a short walk from many famous restaurants.

Why

We feel there are far too few possibilities to talk to, learn from, and hangout with fellow developers in a relaxed and informal environment. And we'd like to share work and get feedback from people chewing on similar challenges.

If you'd like to join the conference and present something, please register soon.

Next Thursday, 12th January 2017, I will visit the regular FileMaker meeting in Saarbrücken.

If you'd like to join us, please sign up on the website filemaker-stammtisch-saarlorlux.de.

Feel free to ask me about our MBS FileMaker Plugin. Or let's talk about upcoming conferences?

How FileMaker Pro can log changes

Since 2012, the MBS Plugin has had audit functions. With version 6.5 they became much faster, so you may want to take a closer look at them. In FileMaker Pro, you can use them to log all changes to the database. Later you can inspect who changed records and use the log to undo actions or display old values.

Audit with MBS

We start with a test database. For the example, we take the starter solution called Event Management. The audit functions need an AuditLog table and a matching layout. Both must be available somewhere, but they can be in a different database. The MBS Plugin looks at the AuditLog layout to determine which fields should be written. The records are then written internally using SQL commands. Therefore, all you need is a relationship to an AuditLog table, even if it is located in a different database. This is quite interesting for server-based solutions: the log can be split into several AuditLog tables, and the relationships define which table logs into which AuditLog table.

We add fields to the AuditLog table. The following fields are required: FieldName, FieldHash, TableName and RecordID. Together, the field name and table name identify the field exactly. RecordID tells us which record was changed, and FieldHash holds the hash of the field value. Since the field value can be very long, writing it to the log is optional.

The following optional fields can also be defined:

FieldValue, FieldOldValue, FieldType, UserName, IP, CurrentTimestamp, TimeStamp, CurrentTime, CurrentDate, Action, CurrentHostTimeStamp, PrivilegeSetName, AccountName, LayoutNumber, ApplicationVersion, FileName, HostApplicationVersion, HostName, HostIPAddress, LayoutName, PageNumber, LayoutTableName, TableID, FieldID, ScriptName and WindowName.

In FieldValue, the plugin saves the new value, and in FieldOldValue the old value of the field. The old value is, of course, only available if the plugin finds the previous entry. FieldType saves the type of the field, for example text. UserName saves the user name and AccountName the current account name. The CurrentTimestamp, CurrentHostTimeStamp, TimeStamp, CurrentTime and CurrentDate fields store the current time and/or date. HostName and HostIPAddress record on which computer the change was made. LayoutName and LayoutNumber record which layout was used, WindowName which window. TableID and FieldID store the IDs of the table and the field, so later you can find values by ID even faster.
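To see why FieldHash and FieldOldValue work together, here is a conceptual sketch in Python with an in-memory SQLite table. It hashes the new value, compares it with the last logged entry for the same table, field and record, and writes a row only when the value changed, carrying the previous value into FieldOldValue. This is an illustration under stated assumptions, not the plugin's implementation. SHA-256 stands in for whatever hash the plugin really uses, and the function names are hypothetical.

```python
import hashlib
import sqlite3
from typing import Optional

SCHEMA = """
CREATE TABLE AuditLog (
    TableName TEXT, FieldName TEXT, RecordID TEXT,
    FieldHash TEXT, FieldValue TEXT, FieldOldValue TEXT
)
"""


def value_hash(value: str) -> str:
    # Assumption: SHA-256 is only a stand-in for the plugin's actual hash
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def log_if_changed(conn: sqlite3.Connection, table: str, field: str,
                   record_id: str, value: str) -> bool:
    """Insert an AuditLog row only when the value differs from the last logged one."""
    row = conn.execute(
        "SELECT FieldHash, FieldValue FROM AuditLog "
        "WHERE TableName = ? AND FieldName = ? AND RecordID = ? "
        "ORDER BY rowid DESC LIMIT 1",
        (table, field, record_id),
    ).fetchone()
    new_hash = value_hash(value)
    if row is not None and row[0] == new_hash:
        return False  # unchanged, nothing to log
    old_value: Optional[str] = row[1] if row is not None else None
    conn.execute(
        "INSERT INTO AuditLog (TableName, FieldName, RecordID, FieldHash, "
        "FieldValue, FieldOldValue) VALUES (?, ?, ?, ?, ?, ?)",
        (table, field, record_id, new_hash, value, old_value),
    )
    return True


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    for text in ["Kickoff", "Kickoff", "Kickoff 2017"]:
        print(text, "->", "logged" if log_if_changed(conn, "Events", "Title", "1", text) else "skipped")
```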

Create an Audit table

So let's create a table called AuditLog with the fields FieldName, FieldHash, TableName, RecordID, FieldValue, FieldOldValue, FieldType, UserName, IP, CurrentTimestamp, Action, FileName and LayoutName. We also add an extra field, EventID, so we can always store the ID of the event and later find all changes for an event. FileMaker automatically creates a layout for the new table. The current plugin needs this layout to find the fields in the table, and later we can use it to see what was logged. Of course, regular users may not need to see the audit table or its layout.

You can create additional fields in the AuditLog table, for example a LogTime field of type timestamp for the time of logging, which FileMaker can fill in automatically. You can also pass values for such additional fields in an audit call, for example to log a variable.

Audit fields

In each table, we create an AuditTimeStamp field, which FileMaker automatically sets to the current timestamp whenever the record changes. If you already have such a field under a different name, you can use it instead and don't need a redundant timestamp field.

We also create a second field called AuditState. It gets an auto-enter calculated value that must be re-evaluated on every change, so please uncheck the "Do not replace existing value of field (if any)" checkbox. The calculation is a call to Audit.Changed, which takes several parameters. First we pass our timestamp, then the name of the table itself, in this case Events. Then we can list fields that should be ignored. We can also pass a field name and a value separated by | to fill a field with a given value, so we set the EventID field to the value of the EVENT ID MATCH FIELD field. Finally, we pass the field "Task Label Plural", as this field should not be logged: it is easily calculated from the other fields. Thus, the audit call looks like this:

MBS("Audit.Changed"; AuditTimeStamp; "Events"; "EventID|" & EVENT ID MATCH FIELD; "Task Label Plural")

For the other tables, the calls would be:

MBS("Audit.Changed"; AuditTimeStamp; "Contributors"; "EventID|" & EVENT ID MATCH FIELD)

MBS("Audit.Changed"; AuditTimeStamp; "Tasks"; "EventID|" & EVENT ID MATCH FIELD)

MBS("Audit.Changed"; AuditTimeStamp; "Agenda"; "EventID|" & EVENT ID MATCH FIELD)

MBS("Audit.Changed"; AuditTimeStamp; "Guests"; "EventID|" & EVENT ID MATCH FIELD)

Now the plugin logs all changes to the events. You can open two windows side by side, one with the Events layout and one with the layout for the AuditLog table. For changes in the portals, you have to click on an empty area of the layout to commit the record; this writes the changes for all portals together. In the AuditLog, we then see many new entries for the new records.

Positive or negative?

There are two variants of the Audit.Changed function in the plugin. Audit.Changed takes the names of the fields that should not be observed. Audit.Changed2, on the other hand, takes a positive list of fields to observe. In general, Audit.Changed2 is faster because it does not have to query which fields are available. Calculation fields with unstored results are not monitored by default, since their values are easy to recalculate.

With the Audit.SetIgnoreCalculations function, you can completely disable monitoring of all calculation fields. With Audit.SetIgnoreSummaryFields, you can ignore summary fields, and with Audit.SetIgnoreUnderscoreFieldNames all fields whose names start with an underscore. You can also use Audit.SetIgnoredFieldNames to specify a global list of field names to ignore. By default, the plugin ignores the AuditState and AuditTimeStamp fields.
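To make the negative-list vs. positive-list distinction concrete, here is a small Python model of the selection rules described above. It covers exclusions with Audit.Changed, an inclusion list with Audit.Changed2, and the optional ignore switches. The function name and the type labels ("text", "calculation", "summary") are hypothetical. This models the described behavior only, and the plugin's own rules, for example for unstored calculations, may differ.

```python
from typing import Dict, Iterable, List, Optional


def fields_to_watch(field_types: Dict[str, str], *,
                    include: Optional[Iterable[str]] = None,
                    exclude: Iterable[str] = (),
                    ignore_calculations: bool = False,
                    ignore_summaries: bool = False,
                    ignore_underscore: bool = False,
                    ignored_names: Iterable[str] = ("AuditState", "AuditTimeStamp")) -> List[str]:
    """Decide which fields to watch. field_types maps field name -> type label,
    e.g. "text", "number", "calculation", "summary" (hypothetical labels)."""
    if include is not None:
        # Positive list (Audit.Changed2 style): only the named fields are candidates
        candidates = [name for name in include if name in field_types]
    else:
        # Negative list (Audit.Changed style): all fields except the named ones
        excluded = set(exclude)
        candidates = [name for name in field_types if name not in excluded]
    ignored = set(ignored_names)
    watched = []
    for name in candidates:
        kind = field_types[name]
        if name in ignored:
            continue  # global ignore list, by default the audit helper fields
        if ignore_calculations and kind == "calculation":
            continue
        if ignore_summaries and kind == "summary":
            continue
        if ignore_underscore and name.startswith("_"):
            continue
        watched.append(name)
    return watched


if __name__ == "__main__":
    types = {"Title": "text", "Total": "summary", "_tmp": "text",
             "Calc": "calculation", "AuditState": "calculation", "AuditTimeStamp": "timestamp"}
    print(fields_to_watch(types, exclude=["Calc"]))
    print(fields_to_watch(types, include=["Title", "Total"], ignore_summaries=True))
```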

Display the audit

We can display the audit data for the record. Perhaps not for every user, but the display is always useful as a change log. To do this, create a relationship between Events::EVENT ID MATCH FIELD and AuditLog::EventID in the relationship diagram.

Now make room in the Events layout and add a portal for the AuditLog table. If the table is not listed in the "Show related records from" pop-up menu, the relationship above was not set up correctly. You could sort the portal records descending by CurrentTimeStamp. The fields FieldName, FieldValue and perhaps UserName are enough to show.

Audit on deletion

If you want to log deletions, you have two options: either users can delete records only via script, and you always notify the plugin before deleting, or you use FileMaker's security settings as a delete trigger.

The plugin is then called via the Audit.Delete function:

MBS("Audit.Delete"; MyTable::AuditTimeStamp; "MyTable")

The timestamp is passed but not really used. Instead of MyTable, specify the table of the current record. The plugin logs the record as with the other calls: first any changes to the fields, if needed, and then an entry for the deletion. Of course, you can also do this in a script shortly before the Delete Record step (using Set Variable).

Test users

To test deletion, we first need a test user. In the Security dialog, we create a new account with a new privilege set. The easiest way is to duplicate the [Data Entry Only] privilege set and customize it. In the privilege set settings, there is a Records pop-up menu in the upper left corner. Select "Custom privileges..." there. A dialog appears where you can set the permissions for each table. For each table, select "limited..." for deleting instead of "yes". Another dialog appears where you enter the calculation for the limitation. Here, insert the plugin call, for example for the Events table:

MBS("Audit.Delete"; AuditTimeStamp; "Events")

For testing, we build a script called Login with just one step: Re-Login [With dialog: On]. If everything works, you can use this script to log in again as the new user. Create a new record there and make sure you commit some changes. You will see the log entries in a second window showing the AuditLog table. If you now delete the record, the calculation is evaluated and the record should be logged as deleted. Please note that FileMaker first evaluates the calculation and then shows a confirmation dialog. If the user clicks Cancel there, the plugin has already written an entry, even though the record is not deleted.

Experience

Many plugin users use the audit functions. Because FileMaker saves changes automatically, every database should have an audit log: a record is easily changed or deleted, and later no one knows who did what and why. In addition, you can offer an undo feature if you can find out what was previously in a field.

The AuditLog table grows quickly, because a record is created for each change. However, you can easily rotate the table, for example switching to a new one every month. The AuditLog table and layout only need to be available in an open file, so it is recommended to put the AuditLog table in a separate database file. You can even have different audit databases for different files and separate the log data, so one table logs into one audit file and another table logs into another file. For this, however, the relationships must be set up correctly, so that a table occurrence named AuditLog in the relationship graph points to the corresponding table. Those audit log tables can, of course, have different names.

Auditing works best when users don't know about it. If an employee makes a mistake, a supervisor can undo the change. Or, if the data does not add up, you can find out who did what and when.

In version 6.5, we significantly improved the SQL statements used for auditing, so the new plugin is much faster over the network. For each change, we need to look up old entries in the AuditLog and check whether the values have changed.

Give it a try!

As you may know, we have a FileMaker example that converts Markdown to HTML using a JavaScript library in a web viewer.

For a client I just converted that example to Xojo.

On Mac OS X it looks like this:

and for Windows:

This is using Internet Explorer on Windows and WebKit on Mac.

On both platforms we load a local HTML file that contains two text areas in an HTML form. We fill the first text area with the input text, call a JavaScript function and read the output from the second form field. This way we can pass much more multi-line text than we ever could through URLs.

Please try it. It will be included in the next prerelease, or if you need it now, drop me a line by email.

There is an update to SQLite.

The new version 3.16.1 was released yesterday, and I have already updated the plugins here.

3.16.x is faster, fixes a few bugs and has some new shell commands.

If you need a copy now, you can email me as usual.

For our German-speaking users:

We have collected the articles about the MBS Plugin from FileMaker Magazin and put them online here: FileMaker Magazin articles:

- FMM 201606: Filling in Word files, a tip for the MBS Plugin

- FMM 201605: The MBS context menu, enabling/disabling script steps faster

- FMM 201604: Integrating web services into FileMaker, using CURL commands in the "MBS Plugin"

- FMM 201603: 4000 functions in ten years, a short look back at the MBS Plugin

- FMM 201601: iOS App SDK, your own iOS apps based on FileMaker

- FMM 201506: Authenticity through signature verification, transferring data and detecting changes

- FMM 201505: Wishes come true, what's new in the "MBS Plugin" at FMK 2015

- FMM 201504: Copying records efficiently, with a bit of SQL and the MBS Plugin

- FMM 201503: New records without switching layouts, with the help of SQL commands and the MBS Plugin

- FMM 201501: QuickList, fast lists for FileMaker

- FMM 201405: Sending email, more options with the MBS Plugin

- FMM 201405: Preparing PDFs for sending, shrinking large files

- FMM 201402: Variables, globalized solutions via plugin

- FMM 201401: Calendar events and reminders, how to create events from FileMaker

- FMM 201306: Reading zip archives, extracting images from OpenOffice documents

- FMM 201303: Script triggers over the network, triggering scripts across the network

- FMM 201303: Syntax coloring, calculations and scripts show their colors

- FMM 201203: Working with file dialogs, more convenient file exports with the MBS Plugin

- FMM 201105: Scaling images with the MBS FileMaker Plugin, keeping your database lean

We recommend that all FileMaker users subscribe to the magazine and buy the back issues. FileMaker Magazin is an excellent source of information, tutorials and pro tips.

New in this prerelease of the 7.0 MBS FileMaker Plugin:

- Added GMImage.DestroyAll function.

- Fixed a problem with WordFile not finding a tag if it is the last text in a document.

- Fixed a crash when opening MIDI devices on Windows in 64-bit applications.

- Added PortMidi.GetDeviceID and PortMidi.GetDeviceName functions.

- Added XML helper functions: XML.ExtractText, XML.NodeNames, XML.SetVariables, XML.ListAttributes, XML.HasAttributes, XML.GetAttributes and XML.SubTree.

- Added functions to read PKCS12 and X509 certificates.

- Added more checks in CURL functions to avoid changing script triggers while a transfer is in progress.

- Added SerialPort.HasLine and SerialPort.ReadLine functions.

- Changed how text is returned, so that many functions that return text in a given encoding can now return hex or Base64 if you pass hex or base64 as the encoding.

- Changed WordFile.ReplaceTag to create multiple paragraphs if the replacement text contains multiple lines and the tag is part of a normal text paragraph.

- Added WordFile.RemoveTableRow function.

- Added checks to the Audit function to report an error if the AuditLog layout does not have the required fields.

- Added functions for batch download of files over FTP: CURL.GetBatchCurrentFileName, CURL.GetBatchCurrentFilePath, CURL.GetBatchDestinationPath, CURL.GetBatchFileNames and CURL.SetBatchDestinationPath.

- Added DynaPDF.PageLink and DynaPDF.PageLinkEx functions.

- Added DynaPDF.SetLinkHighlightMode and DynaPDF.GetLinkHighlightMode functions.

- Fixed a bug in the XML.canonical function.

- Changed ImageCapture.requestScan to better report errors, e.g. empty document feeder.

- Updated LibXL to version 3.7.2.

- Added DynaPDF.AddAnnotToPage and DynaPDF.WatermarkAnnot functions.

- Updated DynaPDF to version 4.0.6.17.

- Added DynaPDF.GetContent and DynaPDF.SetContent functions.
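The change to returning text can be pictured with a minimal Python sketch: raw bytes are decoded with the requested encoding, unless the caller asks for hex or base64, in which case an ASCII representation of the bytes comes back instead. The function name `bytes_to_text` and the lowercase hex output are our own assumptions, not the plugin's API.

```python
import base64


def bytes_to_text(data: bytes, encoding: str) -> str:
    """Hypothetical helper: decode bytes, or return them as hex/Base64 text."""
    name = encoding.lower()
    if name == "hex":
        return data.hex()  # lowercase hex digits (an assumption)
    if name == "base64":
        return base64.b64encode(data).decode("ascii")
    # Any other value is treated as a regular text encoding such as "UTF-8".
    return data.decode(encoding)
```

Asking for hex or Base64 is handy when the data is binary or its encoding is unknown, because no decoding step can fail.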

Download at monkeybreadsoftware.de/filemaker/files/Prerelease/ or ask to be added to the Dropbox shared folder.

Did you notice that the interactive Containers in FileMaker are really Webviewers?

You may know that we have CLGeocoder functions in our plugins to query geo coordinates for addresses on Mac. But some users need this cross-platform, and I recently implemented a sample script for a user.

For all FileMaker users in the Hamburg area:

FileMaker Inc today released FileMaker Pro 15.0.3, an update to address bug fixes and various compatibility issues.

As you may know you can use

There is an update to SQLite.